Artificial Kinaesthetic Intelligence

I investigate kinaesthetic expression as a means of relational, shared intelligence. Simulating or predicting the direction and velocity of movement that is in the process of being formed is part of any encounter between bodies, and as a form of situated action it is one foundation of intelligence itself.

For a more thorough discussion, see “Discerning Relational Data in Breath Patterns: Gilbert Simondon’s Philosophy in the Context of Sequence Transduction” in MATTER: Journal of New Materialist Research, “Artificial Relational Intelligence: Breath as Interface” in Culture Machine, and “Breathing Down my Neck” in Cambridge University Press’ special edition of TDR: The Drama Review.

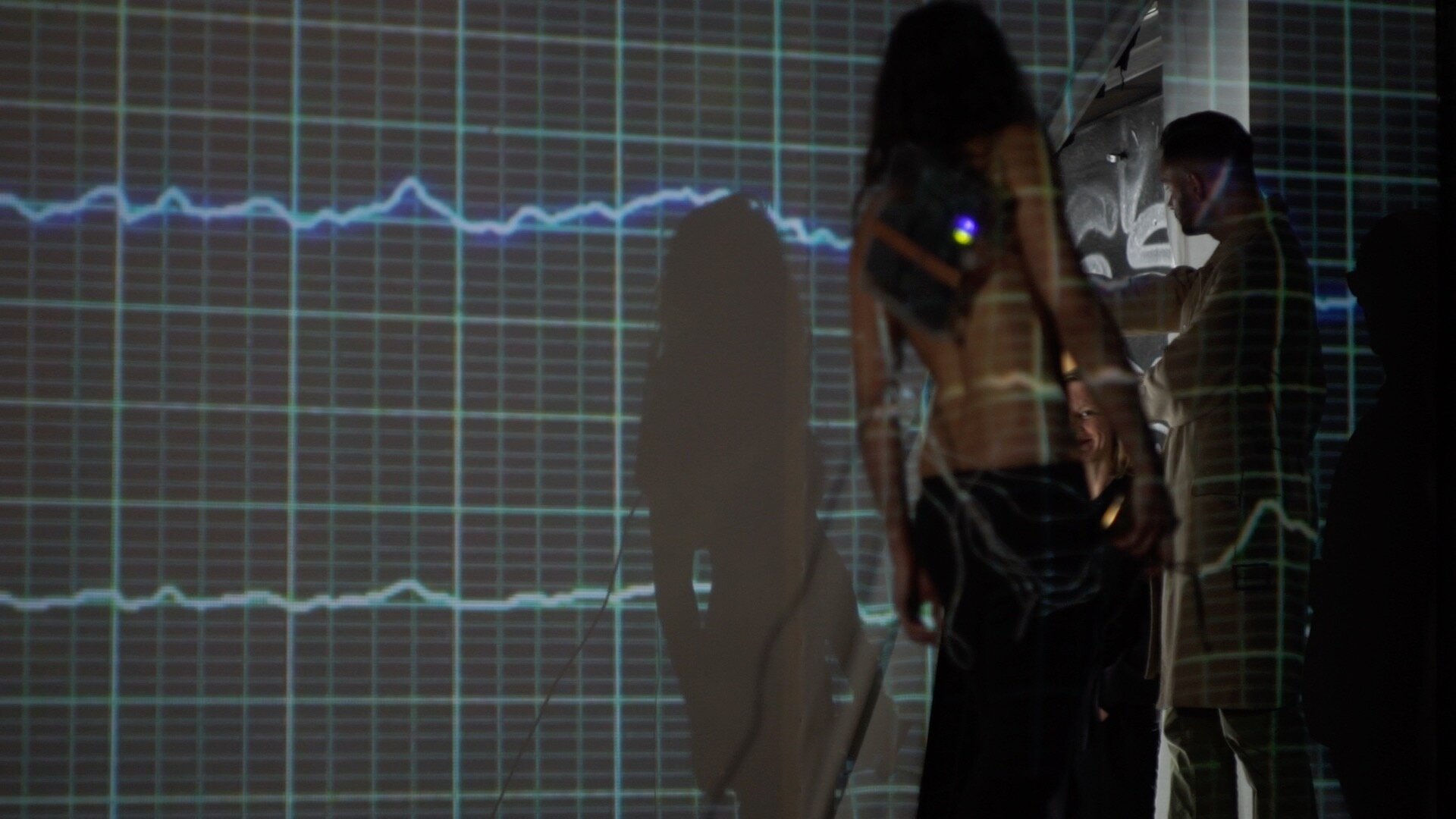

Synchronicities, 2023

14:39 min 6-channel 4k video, bodies, windows, algorithm, camera, wearable digital stethoscopes, sound recorders, timecode, attention, breaths, kinaesthesia, silence

Solo Exhibition, Zemeckis Center for Digital Arts, Los Angeles, USA, 2023

Relational Intelligence

Through electromyography and the audio signal processing of bodily sounds, I create mechanisms and situations that aid communication which does not rely on classifications such as words. These mechanisms make tangible the subtle somatic negotiations bodies perform in relation to one another.

Breath Dataset | Revealing Synchronicities

I have developed the hard- and software to conduct a form of breath analysis that reveals the non-verbal languages “spoken” synchronously by interacting bodies.

Below are samples of my dataset. For more information, please email mullertr[at]usc.edu.

The interface for breath analysis was exhibited at the International Society for Research on Emotion (ISRE) Conference, Los Angeles, 2022.

The dataset was presented at the International Symposium on Electronic Art (ISEA), Barcelona, 2022.

(Headphones suggested)

Breath as Interface

Breathing informs an organism’s kinaesthetic awareness (i.e., the awareness of the body’s positioning) while, in turn, kinaesthesia informs the organism’s breathing. This subconscious feedback further connects interacting organisms as they organise their bodies somatically and coordinate their movement nonverbally. Within this coordination, I decipher patterns that amount to a form of language by analysing the organisms’ audible breaths with the help of signal processing.
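As a loose illustration only, and not the method behind this project, the following sketch shows one simple way synchrony between two breath recordings might be estimated: each recording is reduced to a loudness envelope, and the two envelopes are compared by normalised cross-correlation to find the time lag at which they align best. The function names `envelope` and `best_lag`, the window size, and the synthetic signals are all assumptions made for the example.

```python
import math


def envelope(samples, window):
    """Moving average of the rectified signal: a crude loudness envelope."""
    rectified = [abs(s) for s in samples]
    out = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that has just left the averaging window.
            running -= rectified[i - window]
        out.append(running / min(i + 1, window))
    return out


def best_lag(a, b, max_lag):
    """Return (lag, score) where b best matches a.

    The score is a normalised cross-correlation; a positive lag means
    that b trails a by that many samples.
    """
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    a0 = [x - mean_a for x in a]
    b0 = [y - mean_b for y in b]
    norm = math.sqrt(sum(x * x for x in a0) * sum(y * y for y in b0))
    if norm == 0:
        return 0, 0.0  # at least one signal is flat; nothing to compare
    best = (0, float("-inf"))
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        for i, x in enumerate(a0):
            j = i + lag
            if 0 <= j < len(b0):
                total += x * b0[j]
        score = total / norm
        if score > best[1]:
            best = (lag, score)
    return best


if __name__ == "__main__":
    # Hypothetical stand-ins for two breath envelopes: the second body
    # follows the first with a delay of five samples.
    first = [math.sin(2 * math.pi * i / 40) for i in range(200)]
    second = [math.sin(2 * math.pi * (i - 5) / 40) for i in range(200)]
    lag, score = best_lag(first, second, max_lag=10)
    print(f"estimated lag: {lag} samples, correlation: {score:.2f}")
```

With real recordings, `envelope` would be applied to each audio track first, and the resulting lag would indicate which body's breath tends to lead the other's. Cross-correlation captures only one narrow aspect of coordination; it is named here as a stand-in for whatever analysis the work actually uses.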

Relational Intelligence in the Automatic Pause –

Non-fiction Gone WronG, 2022

live performance

At the 2022 International Society for Research on Emotion (ISRE) conference, I asked an actor to present my paper to an unwitting audience. My paper, Relational Intelligence, was presented as part of the Affective Computing panel. Playing the fictional character of a sniper’s spotter, I blithely disrupted the presentation of “her” paper, saying that this research would benefit sniper-spotter duos, who depend on instantaneous communication, and could hence allow them to shoot more accurately. Walking it back, I emphasised that I was only spotting, not pulling the trigger, much like the university often claims to merely facilitate research rather than employ the tools that are invented.

The piece contextualises how research is funded, specifically research involving artificial intelligence, which far too often lacks ethical review processes. Complicit in the computational capitalism practised at academic institutions, I seek to negotiate its horrors and allures in their inconvenient immediacy while exposing, celebrating, and problematising the meta-narrative’s immorality and parasitic resistance (Watkins Fisher).

Breathing down my neck, 2023

6:40 min 4k Video, four actors, three cameras, conference, algorithm, academic paper, affective computing panel, audience, synchronicities, punctured contemporary temporality, projector, breath of a spotter’s sniper, breath of a sniper’s spotter, breath of a victim, samosa

with Sophie Dia Pegrum, John Smith, Rahjul Young, and Amalia Mathewson

4k Video published in Cambridge University Press’ special edition of TDR: The Drama Review.

Violet Eyelids ↓